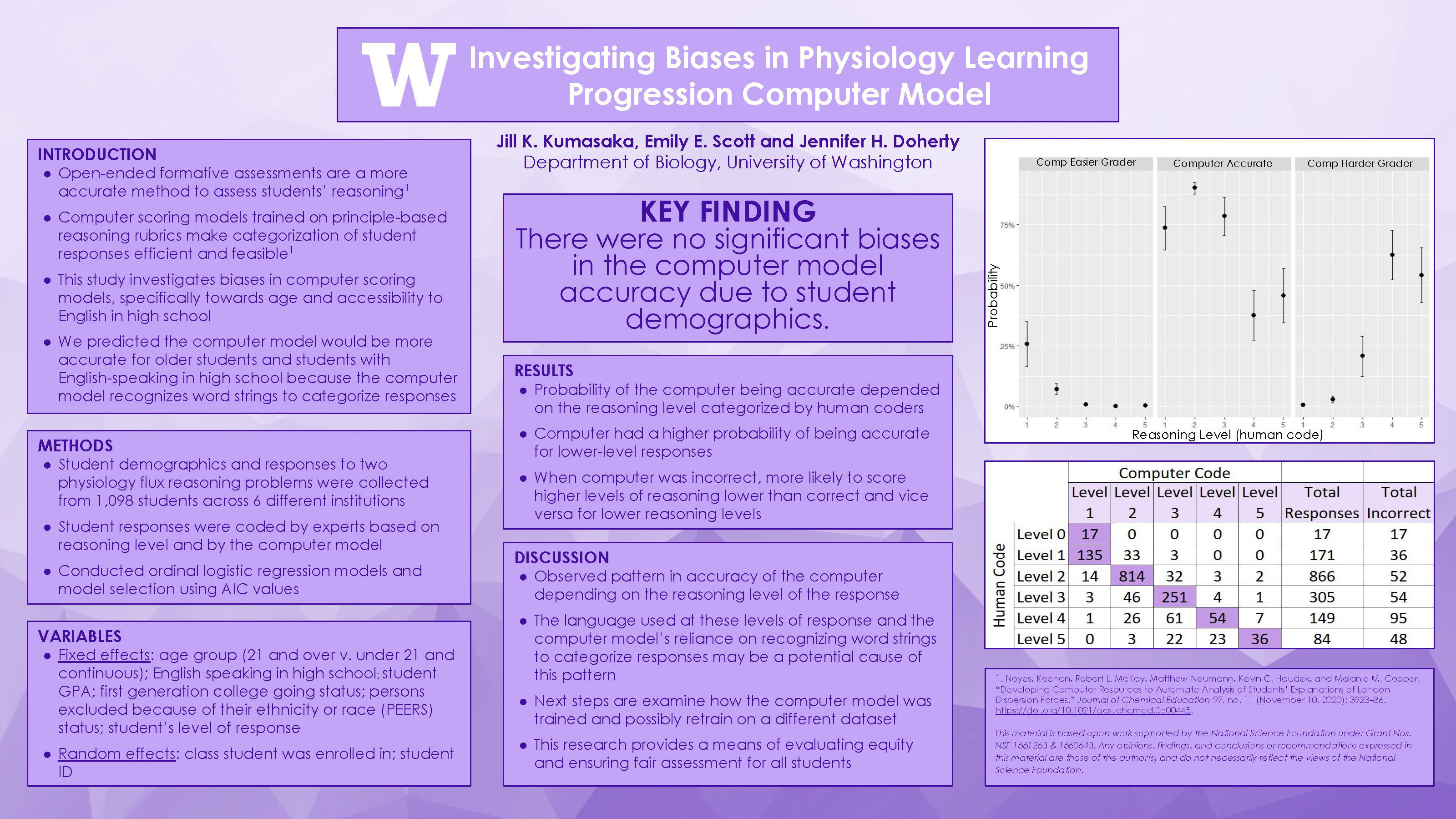
Investigating Biases in Physiology Learning Progression Computer Models

Authors:

- Jill Kumasaka, Biology, UW Seattle

- Emily Scott, Biology, UW Seattle

- Jennifer Doherty, Biology, UW Seattle

Abstract:

Multiple-choice assessments do not adequately gauge students’ understanding of principle-based reasoning in physiology. Open-ended formative assessments provide a more accurate way to assess students’ reasoning; however, large classes and short staffing can limit their use. Using machine learning to develop computer scoring models, trained to score responses according to principle-based reasoning rubrics, can make categorizing student responses efficient and feasible. However, potential biases in computer scoring models have not yet been investigated. The aim of this study is to investigate such biases, specifically with respect to age and access to English in high school, by comparing demographic data with trends in the differences between computer and human codes.

We collected demographic data and responses to two physiology questions, which were then coded by both expert human coders and the computer model. Using generalized linear models with fixed effects to control for potential discrepancies, we investigated relationships between demographic data and computer scoring accuracy.
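The modeling step can be written out as a logistic generalized linear model. The form below is a minimal sketch, not the authors' stated specification: it assumes the outcome is a binary indicator of computer-human agreement, that the fixed effects are for question, and that the human-coded reasoning level enters as a categorical predictor. All symbols are hypothetical.

$$
\operatorname{logit}\Pr(Y_{ij} = 1) = \beta_0 + \beta_1\,\mathrm{Age}_i + \beta_2\,\mathrm{English}_i + \delta_{L_{ij}} + \gamma_j
$$

Here $Y_{ij} = 1$ if the computer's code for student $i$'s response to question $j$ matches the expert code, $\mathrm{English}_i$ indicates access to English in high school, $L_{ij}$ is the human-coded reasoning level (with $\delta$ fixed at 0 for a reference level), and $\gamma_j$ is the question fixed effect (with $\gamma$ fixed at 0 for a reference question). Under this form, "no demographic bias" corresponds to $\beta_1$ and $\beta_2$ not differing significantly from zero, while a dependence of accuracy on reasoning level would show up in the $\delta$ terms.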

There were no significant differences in computer model accuracy across our demographics of interest. However, the probability that the computer was accurate depended on the reasoning level of the response as categorized by human coders: the computer was more likely to be accurate for lower-level responses. When the computer was incorrect, it tended to score higher-level responses lower than the human coders did, and lower-level responses higher.
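The accuracy-by-level and error-direction pattern can be tabulated directly from paired codes. The function below is a minimal sketch assuming each response has one human code and one computer code on an ordinal integer scale (higher means more advanced reasoning); the name `agreement_by_level` and the toy pairs are hypothetical and are not study data.

```python
from collections import defaultdict


def agreement_by_level(pairs):
    """Summarize computer-vs-human agreement for each human-coded level.

    pairs: iterable of (human_level, computer_level) integers, where a larger
    number means a higher reasoning level (hypothetical coding scheme).
    Returns {human_level: {"n", "accuracy", "under", "over"}}, where "under"
    and "over" count errors in which the computer scored lower or higher
    than the human coder.
    """
    counts = defaultdict(lambda: {"n": 0, "correct": 0, "under": 0, "over": 0})
    for human, computer in pairs:
        c = counts[human]
        c["n"] += 1
        if computer == human:
            c["correct"] += 1
        elif computer < human:
            c["under"] += 1  # computer under-scored the response
        else:
            c["over"] += 1   # computer over-scored the response
    # Report levels in ascending order with accuracy as a proportion.
    return {
        level: {
            "n": c["n"],
            "accuracy": c["correct"] / c["n"],
            "under": c["under"],
            "over": c["over"],
        }
        for level, c in sorted(counts.items())
    }


if __name__ == "__main__":
    toy_pairs = [(1, 1), (1, 1), (1, 2), (2, 2), (2, 1), (3, 3), (3, 2), (3, 1)]
    for level, stats in agreement_by_level(toy_pairs).items():
        print(level, stats)
```

On the toy pairs, accuracy falls as the human-coded level rises, errors at the lowest level are over-scores, and errors at the highest level are under-scores. That is the shape of the pattern described above, shown here on made-up numbers only.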

While we did not identify any biases related to our demographics of interest, we observed a pattern in the computer's accuracy depending on the reasoning level of the response. Our investigation provides essential feedback for computer model developers. An improved computer model will measure students’ reasoning levels more accurately and better guide physiology education. Instructors in other disciplines will also benefit from this research, because it proposes a framework for better assessing student understanding.

Comments

The presenter for this poster will be available to respond to comments during Poster Session 1 on April 20, 2:00-2:50 p.m.

- Type: Image

- File format: jpg

- File size: 1 MB

- Publisher: University of Washington