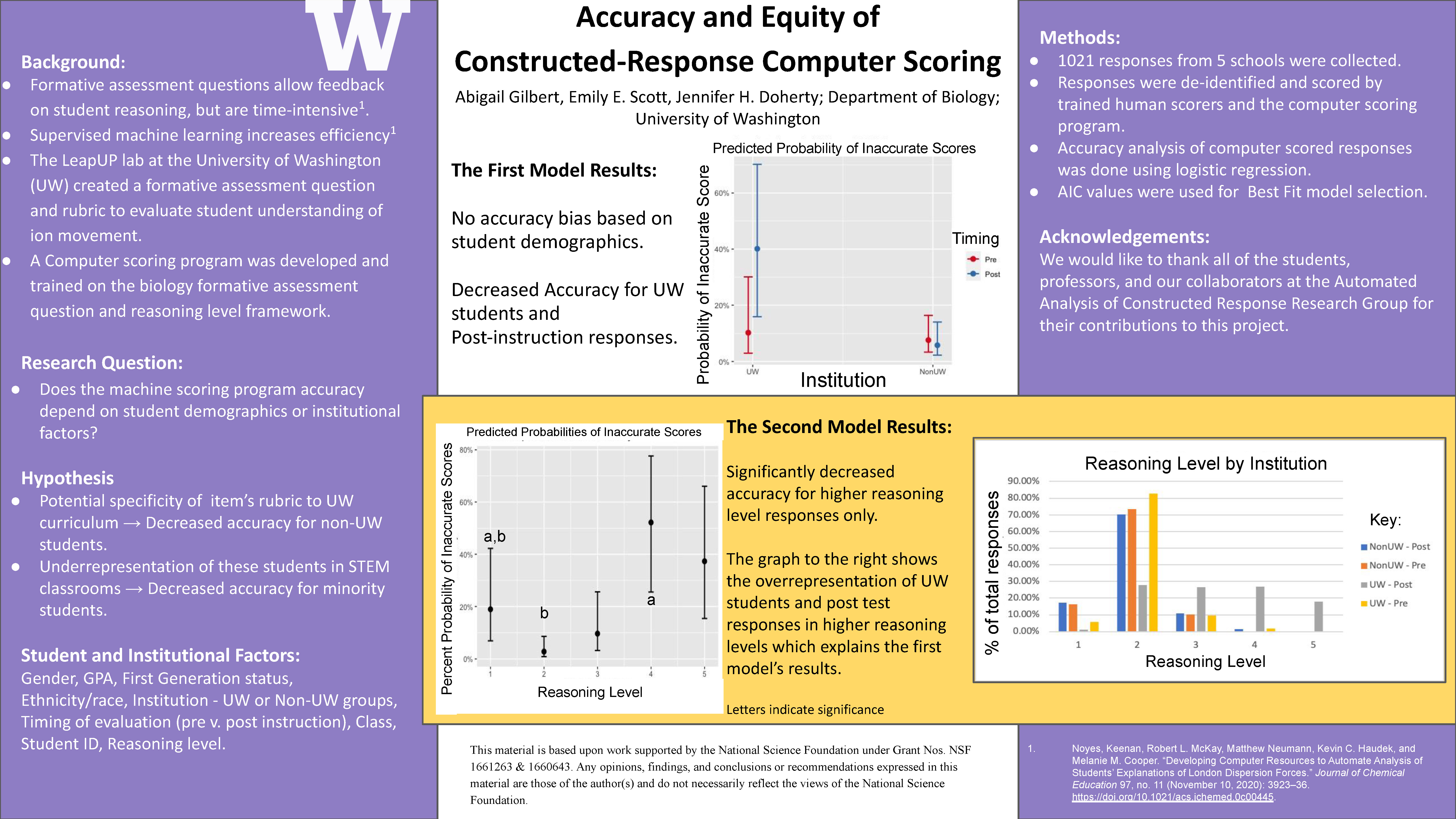

Constructed-response short-answer assessments provide greater insight into student understanding than multiple-choice evaluation but involve time-intensive grading. To increase scoring efficiency, we worked with the Automated Analysis of Constructed Response (AACR) research group to use supervised machine learning to generate a computer scoring program for a biology constructed-response formative assessment question.

However, ensuring accurate and unbiased scoring is necessary before this technology enters classrooms. Due to the underrepresentation of first-generation and minority students in STEM classrooms, and the assessment rubric’s potential specificity to the University of Washington curriculum, we hypothesized decreased scoring accuracy for responses from first-generation and minority students and from students not attending the University of Washington.

Responses to the constructed-response formative assessment question were collected from five institutions, including public universities and community colleges. Responses were scored by trained human scorers and by the scoring program. Previous research found that this question, when human-scored, shows no bias by student demographic (i.e., there is no differential item functioning). Responses were de-identified prior to human scoring, and human scores were reviewed by the supervising researcher before analysis. We analyzed the scoring program’s accuracy for dependence on students’ reasoning level, GPA, timing of assessment, university, first-generation status, race or ethnicity, and gender, using logistic regression and model selection. Our analysis found no significant demographic or institutional bias in the scoring program. However, the results indicated lower computer scoring accuracy for responses with higher-level reasoning scores (that is, when students gave more accurate responses to the question). For this assessment question and scoring program, our results indicate that further training of the program on higher-level responses is needed to eliminate this scoring bias. This bias-analysis research helps ensure that the increased scoring efficiency offered by computer scoring programs does not come with an increase in bias in assessment.
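The logistic regression and model selection step described above can be illustrated with a minimal sketch. This is not the authors' code or data: the counts are invented for illustration, only two of the listed predictors are included (under the hypothetical names `high_reasoning` and `first_gen`), and AIC is assumed as the selection criterion, since the abstract does not say which criterion was used. The outcome modeled is whether the computer score agreed with the human score.

```python
import math

# HYPOTHETICAL counts, invented for illustration only (not the study's data).
# Each row: (high_reasoning, first_gen, n_responses, n_agree), where n_agree
# is how many computer scores matched the human score in that cell.
CELLS = [
    (0, 0, 200, 190),
    (0, 1, 200, 190),
    (1, 0, 200, 140),
    (1, 1, 200, 140),
]

def design(cell, predictors):
    """Return the intercept plus the chosen predictor columns for one cell."""
    values = {"high_reasoning": cell[0], "first_gen": cell[1]}
    return [1.0] + [float(values[name]) for name in predictors]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def log_likelihood(beta, predictors):
    """Binomial log-likelihood of agreement (constant term omitted)."""
    total = 0.0
    for cell in CELLS:
        x = design(cell, predictors)
        p = sigmoid(sum(b * xi for b, xi in zip(beta, x)))
        n, agree = cell[2], cell[3]
        total += agree * math.log(p) + (n - agree) * math.log(1.0 - p)
    return total

def fit(predictors, steps=20000, rate=1.0):
    """Fit coefficients by plain gradient ascent on the mean log-likelihood."""
    beta = [0.0] * (len(predictors) + 1)
    n_total = sum(cell[2] for cell in CELLS)
    for _ in range(steps):
        grad = [0.0] * len(beta)
        for cell in CELLS:
            x = design(cell, predictors)
            p = sigmoid(sum(b * xi for b, xi in zip(beta, x)))
            resid = cell[3] - cell[2] * p  # observed minus expected agreements
            for j, xi in enumerate(x):
                grad[j] += resid * xi
        beta = [b + rate * g / n_total for b, g in zip(beta, grad)]
    return beta

def aic(predictors):
    """AIC = 2k - 2 log L, where k counts the intercept and predictors."""
    beta = fit(predictors)
    return 2 * len(beta) - 2 * log_likelihood(beta, predictors)

# Candidate models: intercept only, each predictor alone, and both together.
CANDIDATES = [(), ("high_reasoning",), ("first_gen",),
              ("high_reasoning", "first_gen")]
scores = {model: aic(model) for model in CANDIDATES}
best = min(scores, key=scores.get)

for model, score in scores.items():
    print(model or "(intercept only)", round(score, 2))
print("lowest AIC:", best)
```

Because the invented counts make agreement depend on reasoning level but not on first-generation status, the model with `high_reasoning` alone has the lowest AIC, which mirrors the pattern the abstract reports. A real analysis would use response-level data, all of the listed predictors, and an established statistics package rather than a hand-written fit.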

Investigating the Accuracy of Constructed-Response Computer Scoring

Video Presentation

Authors:

- Abigail Gilbert, Department of Biology, UW Seattle

- Emily E. Scott, Biology, UW Seattle

- Jennifer H. Doherty, Biology, UW Seattle

Abstract:

Formative assessment questions provide feedback on student understanding but involve time-intensive grading. Working with colleagues in Michigan State University’s AACR group, we increased the efficiency of scoring student responses to these questions by using supervised machine learning to generate a computer scoring program. However, ensuring scoring accuracy across student groups is necessary for equitable implementation of this technology in classrooms. We analyzed the scoring program’s accuracy for different demographic groups. We hypothesized decreased scoring accuracy for responses from first-generation and minoritized students, due to their underrepresentation in STEM classrooms, and increased accuracy for responses from University of Washington students, due to the possible specificity of the scoring rubric to the University of Washington curriculum.


Poster PDF

View a PDF version of the poster in Google Drive to enlarge the image or download a copy.

Comments

The presenter for this poster will be available to respond to comments during Poster Session 2 on April 20, 3:45-4:30 p.m.

- type: Image

- file format: jpg

- file size: 3 MB

- publisher: University of Washington